Kubernetes for Databases: Should You Run PostgreSQL, MySQL, and MongoDB on K8s?

The push to containerize everything has reached the final frontier: the stateful database. For years, the conventional wisdom was simple: “Never run your database on Kubernetes.”

But is that still true?

As Kubernetes (K8s) solidifies its position as the de facto operating system for the cloud, the question has shifted from if you can run a database on it to when and how you should.

The promise is incredibly seductive: unified deployment, management, and scaling for your entire application stack, from stateless microservices to stateful data stores. But the path is fraught with complexity that can quickly turn your database into a liability.

Let’s cut through the hype and fear-mongering. We’ll break down the real challenges, the concrete benefits, and the absolute best practices for running production-grade databases on Kubernetes.

The Great Debate: Why It’s So Controversial

Running a database on K8s is fundamentally different from running a stateless app. The stakes are far higher: a lost stateless pod is replaced in seconds, while a lost or corrupted volume can mean lost data. Here’s the core of the debate:

The Case AGAINST:

- State is Hard: Kubernetes was originally designed for stateless workloads. Databases are the epitome of statefulness. Managing persistent data that must survive pod rescheduling, failures, and upgrades is complex.

- Performance Concerns: Databases often require low-latency, high-throughput access to storage and network. The abstraction layers in K8s (container networking, network-attached volumes) can introduce overhead and unpredictable I/O latency.

- Operational Complexity: You’re replacing the well-understood operational playbooks of virtual machines with complex Kubernetes abstractions (StatefulSets, PersistentVolumes, Operators). A misconfiguration can lead to data loss.

- “It’s a Rube Goldberg Machine”: Critics argue you’re using a complex system (K8s) to manage another complex system (a database), when a VM or a managed cloud database service is often simpler and more robust.

The Case FOR:

- Unified DevOps & GitOps: Manage your entire stack—app logic, config, and data—with the same tools (kubectl, Helm, ArgoCD). Your database deployments become declarative, version-controlled, and part of your CI/CD pipeline.

- Consistency and Portability: The same Kubernetes manifests can run on AWS, GCP, Azure, or on-premises, reducing vendor lock-in and simplifying disaster recovery strategies.

- Automated Operations: Kubernetes Operators can automate complex database tasks like backups, failover, scaling, and upgrades, potentially reducing toil for your Ops team.

- Cloud-Native Architecture: For truly distributed, cloud-native applications, having the database co-located in the same cluster can reduce latency and simplify service discovery.

The #1 Rule: Never Use Deployments for Databases

This is the most critical best practice. You must use a StatefulSet.

- Deployment: Pods are fungible and interchangeable. They get random names and can be rescheduled anywhere. This is chaos for a database that needs a stable identity and persistent, sticky storage.

- StatefulSet: Pods are created in a sequential, predictable order (postgres-0, postgres-1, etc.). Each pod gets a unique, stable network identity and its own dedicated PersistentVolumeClaim (PVC) that sticks to it, even if it’s rescheduled to a different node.

Example PVC for a StatefulSet:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: data-postgres-0  # Unique for each pod
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: ssd-high-iops  # Critical for performance
  resources:
    requests:
      storage: 100Gi
```
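In practice a PVC like this is rarely written by hand: a StatefulSet generates one per pod from its volumeClaimTemplates, naming it after the template, the StatefulSet, and the pod ordinal. The manifest below is a minimal sketch of that mechanism only. The image tag, labels, headless Service name, and the postgres-credentials Secret are assumptions for illustration, and nothing here configures replication between the pods; that part is what an Operator is for.

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: postgres
spec:
  serviceName: postgres          # Assumed headless Service giving each pod a stable DNS name
  replicas: 3                    # Creates postgres-0, postgres-1, postgres-2 in order
  selector:
    matchLabels:
      app: postgres
  template:
    metadata:
      labels:
        app: postgres
    spec:
      containers:
        - name: postgres
          image: postgres:16     # Hypothetical tag; pin whatever version you actually run
          ports:
            - containerPort: 5432
          env:
            - name: POSTGRES_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: postgres-credentials   # Hypothetical Secret
                  key: password
            - name: PGDATA
              value: /var/lib/postgresql/data/pgdata
          volumeMounts:
            - name: data
              mountPath: /var/lib/postgresql/data
  volumeClaimTemplates:
    - metadata:
        name: data               # Yields PVCs data-postgres-0, data-postgres-1, ...
      spec:
        accessModes:
          - ReadWriteOnce
        storageClassName: ssd-high-iops
        resources:
          requests:
            storage: 100Gi
```

Deleting or scaling down the StatefulSet does not delete these PVCs by default, which is the "sticky storage" behavior a database depends on.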

Essential Best Practices for Running Databases on K8s

If you’ve decided to proceed, here’s how to do it right. Ignoring these is a direct path to data loss and downtime.

1. Storage is Everything: Choose Your StorageClass Wisely

Your database is only as good as its storage. The default storage class in your cloud is usually not sufficient.

- Use a High-Performance StorageClass: Provision a dedicated StorageClass using SSDs with guaranteed high IOPS (Input/Output Operations Per Second). On AWS, this might be io2; on GCP, pd-ssd.

- Understand Access Modes: For a primary-replica database, you’ll need ReadWriteOnce (RWO) for each pod’s volume. For a shared-nothing architecture, this is perfect.

- Reclaim Policy: Set the persistentVolumeReclaimPolicy to Retain to prevent automatic deletion of PVs when a PVC is deleted. For dynamically provisioned volumes, that means setting reclaimPolicy: Retain on the StorageClass, since the default there is Delete. This is a crucial safety net against accidental data deletion.
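The three points above can be combined in a single StorageClass. This is a sketch assuming the AWS EBS CSI driver is installed; the IOPS figure is an arbitrary placeholder, and other clouds use a different provisioner and parameter names.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ssd-high-iops
provisioner: ebs.csi.aws.com       # Assumes the AWS EBS CSI driver
parameters:
  type: io2                        # Provisioned-IOPS SSD
  iops: "10000"                    # Placeholder; size this from measured workload
  encrypted: "true"                # Encrypt volumes at rest
reclaimPolicy: Retain              # PVs survive deletion of their PVC
volumeBindingMode: WaitForFirstConsumer  # Create the volume in the zone where the pod lands
allowVolumeExpansion: true         # Allow growing the PVC later
```

With Retain, released volumes are never cleaned up automatically, so they keep accruing cost until someone deletes them by hand.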

2. Embrace Kubernetes Operators: Don’t Roll Your Own

An Operator is a method of packaging, deploying, and managing a Kubernetes application. For databases, they are non-negotiable for production use.

An Operator encodes the operational knowledge of a database administrator (DBA) into software that can automate tasks inside K8s.

- PostgreSQL: Use the CloudNativePG Operator or Crunchy Data Postgres Operator. They handle failover, backups, point-in-time recovery, and cloning.

- MySQL: Use the Oracle MySQL Operator or the Bitpoke MySQL Operator (formerly Presslabs). For sharded deployments, Vitess provides its own operator.

- MongoDB: Use the MongoDB Community Kubernetes Operator or the enterprise version from MongoDB.

Example of what an Operator simplifies (Automated Backups):

```yaml
# Example from CloudNativePG Operator
apiVersion: postgresql.cnpg.io/v1
kind: Cluster
metadata:
  name: my-postgres-cluster
spec:
  instances: 3
  storage:
    size: 100Gi
  backup:
    barmanObjectStore:
      destinationPath: "s3://my-bucket/backups/"
      s3Credentials:
        accessKeyId:
          name: aws-credentials
          key: ACCESS_KEY_ID
        secretAccessKey:
          name: aws-credentials
          key: SECRET_ACCESS_KEY
    retentionPolicy: "30d"  # Automated cleanup
```
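The Cluster spec above tells the Operator where backups go and how long to keep them, but a base backup still has to be triggered. One way to do that with CloudNativePG is a ScheduledBackup resource. The sketch below assumes the cluster name from the example above; the resource name and the 02:00 schedule are arbitrary choices.

```yaml
apiVersion: postgresql.cnpg.io/v1
kind: ScheduledBackup
metadata:
  name: my-postgres-cluster-nightly   # Hypothetical name
spec:
  schedule: "0 0 2 * * *"             # Six fields with seconds first: every day at 02:00:00
  backupOwnerReference: self          # Backup objects are owned by this ScheduledBackup
  cluster:
    name: my-postgres-cluster
```

Note that the schedule uses six fields rather than the five of a classic crontab, which is an easy thing to get wrong when copying an existing cron expression.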

3. Configure Robust Liveness and Readiness Probes

Kubernetes uses probes to know if your pod is healthy. For a database, generic HTTP checks won’t cut it.

- Use Custom Commands: Your probes should execute a command inside the container that checks whether the database is actually accepting connections, not just whether the process exists.

```yaml
livenessProbe:
  exec:
    command:
      - /bin/sh
      - "-c"
      - "pg_isready -h localhost -p 5432 -U postgres"  # Example for PostgreSQL
  initialDelaySeconds: 30
  periodSeconds: 10
readinessProbe:
  exec:
    command:
      - /bin/sh
      - "-c"
      - "pg_isready -h localhost -p 5432 -U postgres"
  initialDelaySeconds: 5
  periodSeconds: 5
```

4. Master Resource Requests and Limits

Databases are resource-hungry. Failing to define limits can lead to a “noisy neighbor” scenario where your database pod starves other critical workloads—or gets killed by the kernel.

- Be Specific and Generous: Always set requests and limits for CPU and memory. The database should have a guaranteed amount of resources.

```yaml
resources:
  requests:
    memory: "8Gi"
    cpu: "2"
  limits:
    memory: "8Gi"  # Equal to the request, so the database's memory is never overcommitted
    cpu: "4"
```

5. Plan for Disaster: Backups and Beyond

Your backup strategy must be Kubernetes-aware. Simply backing up the PVC volume snapshot is not enough.

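- Use Operator-Based Backups: As shown above, leverage the Operator’s native backup functionality to perform consistent backups to object storage (S3, GCS). With CloudNativePG these are physical base backups plus continuous WAL archiving, which is what makes point-in-time recovery possible.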

- Test Your Restores Religiously: A backup is useless until you’ve proven you can restore from it. Automate and regularly schedule restore drills to a separate namespace.
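A restore drill can be expressed declaratively as well. The following sketch assumes CloudNativePG and the object store from the earlier backup example. It bootstraps a throwaway single-instance cluster in a separate namespace from the existing backups. The restore-test namespace and the cluster name restore-drill are hypothetical, and the aws-credentials Secret is assumed to have been copied into that namespace.

```yaml
apiVersion: postgresql.cnpg.io/v1
kind: Cluster
metadata:
  name: restore-drill
  namespace: restore-test            # Hypothetical namespace, isolated from production
spec:
  instances: 1                       # One instance is enough to validate a restore
  storage:
    size: 100Gi
  bootstrap:
    recovery:
      source: my-postgres-cluster    # Refers to the externalClusters entry below
  externalClusters:
    - name: my-postgres-cluster      # Matches the name the original cluster archived under
      barmanObjectStore:
        destinationPath: "s3://my-bucket/backups/"
        s3Credentials:
          accessKeyId:
            name: aws-credentials
            key: ACCESS_KEY_ID
          secretAccessKey:
            name: aws-credentials
            key: SECRET_ACCESS_KEY
```

Once the new cluster reports healthy, run a few sanity queries against it and then delete the namespace. A drill that only checks "the pod started" proves much less than one that checks the data.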

Database-Specific Considerations

- PostgreSQL: Well-suited for K8s. Operators are mature. Several (Crunchy PGO, Zalando’s postgres-operator) embed Patroni for robust leader election and failover; CloudNativePG handles failover itself through the Kubernetes API.

- MySQL: More complex due to its replication model. A clustering layer such as Vitess, deployed through its own operator, can abstract this complexity and provide better sharding capabilities.

- MongoDB: The MongoDB Operator manages replica sets well. Be mindful of the overhead of its sidecar containers for automation.

So, Should You Do It? The Verdict.

Yes, run your database on Kubernetes if:

- You have strong in-house Kubernetes expertise.

- You are all-in on a GitOps workflow and want a unified deployment model.

- You need ultimate portability across clouds.

- You’re using Operators to automate complex database operations.

- Your application is already designed as a cloud-native, distributed system.

No, use a managed cloud database or VMs if:

- Your top priority is pure performance and predictable latency.

- Your team lacks deep Kubernetes and database administration skills.

- “It just works” and operational simplicity are more important than architectural purity.

- Your compliance requirements are easier to meet with a traditional managed service (e.g., AWS RDS, Google Cloud SQL, Azure Database).

The Bottom Line

Running databases on Kubernetes is no longer a forbidden practice—it’s an advanced pattern that requires careful planning, the right tools (especially Operators), and a deep understanding of both K8s and database internals.

The goal isn’t to chase trends, but to choose the architecture that best serves your application’s needs and your team’s skills. For many, a hybrid approach—stateless apps on K8s and databases on managed services—remains the most pragmatic and powerful choice.

FAQ Section

Q: Is running a database on Kubernetes production-ready?

A: Yes, but with major caveats. It is production-ready if you use the correct resources (StatefulSets, high-performance storage) and, most importantly, a mature Kubernetes Operator for your specific database. Going without an Operator is not recommended for production.

Q: How does performance compare to VMs or bare metal?

A: There is inherent overhead due to network abstraction and containerization. For most applications, this overhead is negligible, especially with dedicated storage and proper resource limits. For ultra-low-latency, high-frequency trading, or massive OLAP workloads, bare metal or dedicated VMs might still have an edge.

Q: What about data persistence if the cluster goes down?

A: This is why storage is decoupled. Your PersistentVolumes (PVs) are backed by cloud storage (e.g., AWS EBS, GCP PD) which exists independently of your cluster. If your entire cluster fails, when you rebuild it, you can reclaim the existing PVs and their data, as long as the reclaimPolicy is set to Retain.
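Reclaiming a retained volume in a rebuilt cluster usually means creating a PersistentVolume by hand that points at the existing disk and is pre-bound to the PVC name the StatefulSet will ask for. A rough sketch, assuming the AWS EBS CSI driver; the volume ID, PV name, namespace, and filesystem type are placeholders you would replace with your own values:

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: postgres-data-0-restored     # Hypothetical name
spec:
  capacity:
    storage: 100Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: ssd-high-iops
  csi:
    driver: ebs.csi.aws.com          # Assumes the AWS EBS CSI driver
    volumeHandle: vol-REPLACE_ME     # Placeholder: ID of the surviving EBS volume
    fsType: ext4                     # Assumption: must match the filesystem already on the disk
  claimRef:                          # Pre-bind to the PVC the StatefulSet will create
    namespace: default
    name: data-postgres-0
```

Because an EBS volume lives in a single availability zone, a real manifest would normally also carry a nodeAffinity rule for that zone so the pod is scheduled where the disk can attach.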

Q: Can I run a single-instance database on Kubernetes?

A: Technically, yes. A StatefulSet with a single replica is fine for development and testing environments. However, for production, you should always run with at least three replicas for high availability. The primary value of K8s for databases is managing these complex, distributed setups.

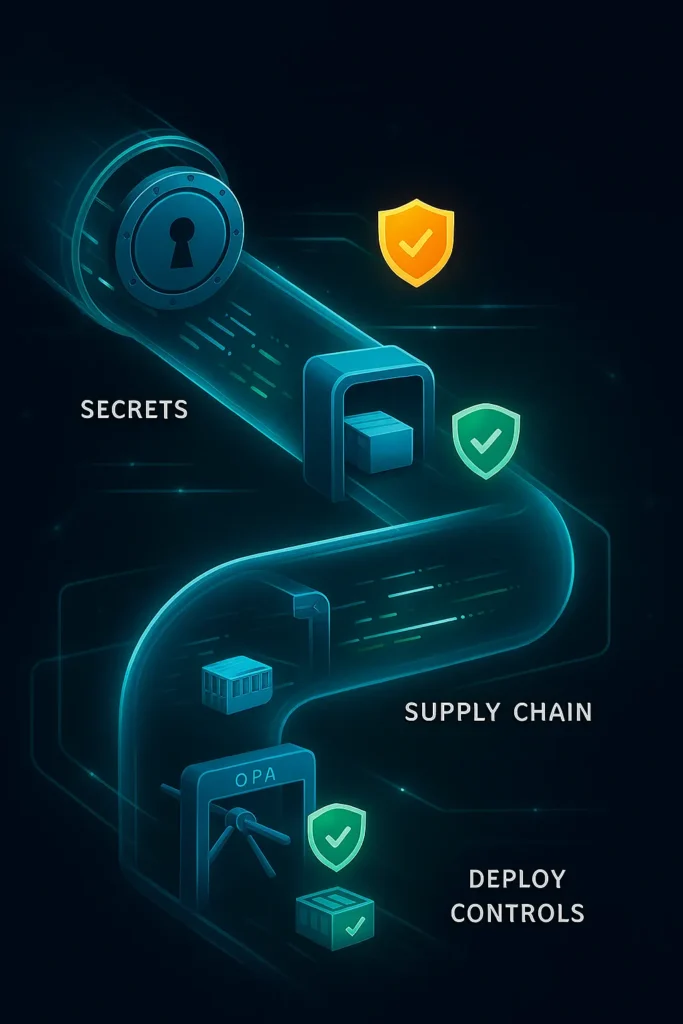

Q: Are there any security concerns specific to databases on K8s?

A: Absolutely. You must:

- Use Secrets (not ConfigMaps) for sensitive database credentials.

- Run your database pods under a dedicated, non-root service account with minimal permissions.

- Use network policies to restrict traffic to the database pods so only your application pods can talk to them.

- Ensure your storage volumes are encrypted at rest.
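As an illustration of the network policy point, the sketch below admits traffic to the database pods only from application pods on the PostgreSQL port. The namespace and both label values are assumptions, and the policy only takes effect if the cluster’s CNI plugin enforces NetworkPolicy.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: postgres-allow-app-only      # Hypothetical name
  namespace: databases               # Hypothetical namespace holding the database pods
spec:
  podSelector:
    matchLabels:
      app: postgres                  # Assumed label on the database pods
  policyTypes:
    - Ingress
  ingress:
    - from:
        - podSelector:
            matchLabels:
              app: my-app            # Assumed label on the application pods (same namespace)
      ports:
        - protocol: TCP
          port: 5432
```

A replicated setup needs additional rules so that database pods can reach each other and the Operator can reach them; as written, this policy would block that traffic too.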
